Peer review with ghclass

Therese Anders and Mine Çetinkaya-Rundel

2020-08-26

Source: vignettes/articles/peer.Rmd

This vignette introduces the peer review functionality of ghclass and provides a step-by-step guide. For more information on the pedagogy of peer review in undergraduate courses, see the draft of the accompanying paper by the package authors.

Before peer review

The peer review process is initiated after a regular assignment has been distributed to students. Below, we run through a quick example of how to set up a fictional assignment “hw1” for four students using the org_create_assignment() function. For a more detailed description of how to set up student repositories in a GitHub Organization, see the ghclass “Get started” vignette.

students = c("ghclass-anya", "ghclass-bruno", "ghclass-celine", "ghclass-diego")

repos = paste0("hw1-", students)

org_create_assignment("ghclass-vignette", repo = repos, user = students,
                      source_repo = "Sta323-Sp19/hw1")

Step-by-step guide to peer review

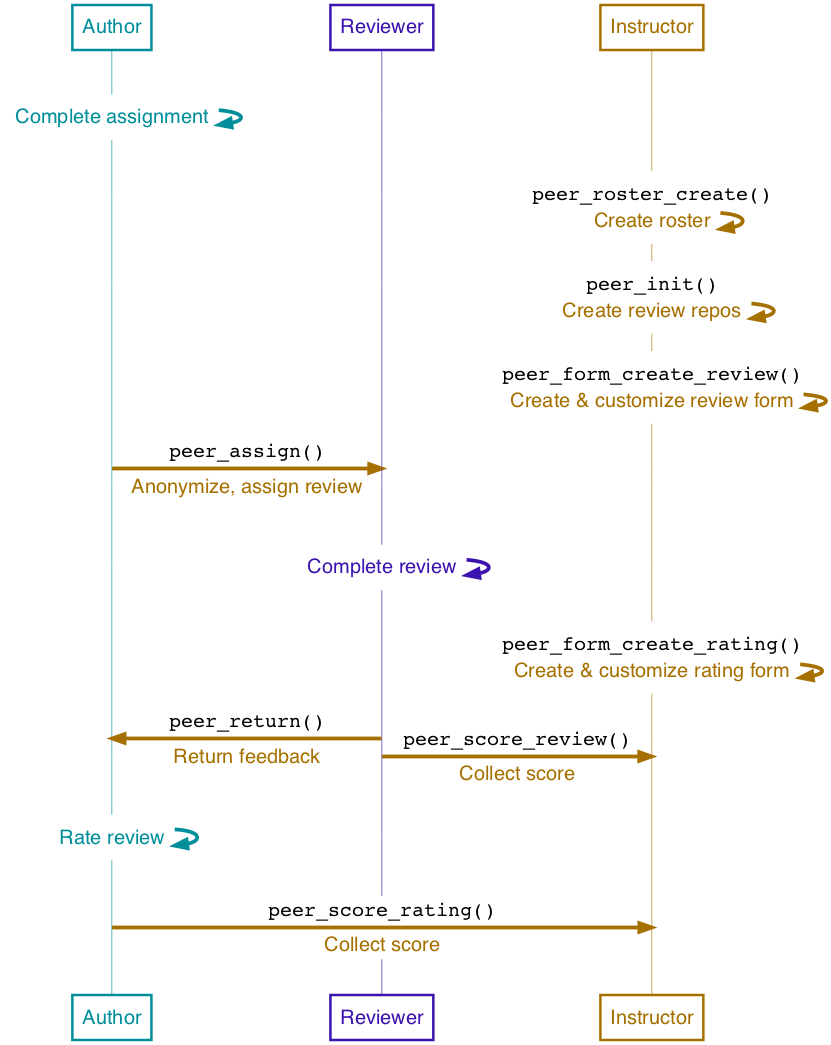

The peer review process with ghclass has three stages.

- Initiation: Create an assignment-specific roster and review repos for all students.

- Assign review: Create and customize review forms, distribute review forms and authors’ assignments to reviewers, students complete review.

- Return review & rating: Create rating form, return review to authors, optional: students rate reviews.

Initiation

Create review repositories

The peer_init() function creates a dedicated review repository for each user, so that authors’ assignments do not permanently live in the repositories where reviewers completed their own assignments. It also adds users to their respective repositories and applies peer review labels to all repositories (i.e., both assignment and review repositories). Review repositories are identified by the tag “-review”, which is automatically added to the instructor-specified prefix or suffix parameters.
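The roster object passed to peer_init() is not constructed in this section. As a rough illustration only, the sketch below builds a small roster by hand, assuming the column layout described in the footnote on custom rosters (user, user_random, and one rev* column per reviewer). The anonymized IDs and the choice to store reviewers’ anonymized IDs in the rev* columns are assumptions made for this example; in practice the roster generated by peer_roster_create() is the reference for the exact format.

```r
students <- c("ghclass-anya", "ghclass-bruno", "ghclass-celine", "ghclass-diego")

# Hypothetical anonymized IDs; any unique labels would work for this sketch
roster <- data.frame(
  user = students,
  user_random = c("aaa", "bbb", "ccc", "ddd"),
  stringsAsFactors = FALSE
)

# Circular assignment: each author is reviewed by the next two students
# in the list, so nobody reviews their own work and the two reviewers differ.
n <- nrow(roster)
idx <- seq_len(n)
roster$rev1 <- roster$user_random[(idx %% n) + 1]
roster$rev2 <- roster$user_random[((idx + 1) %% n) + 1]

roster
```

A circular shift like this gives every student the same number of reviews to write, which keeps the workload even.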

peer_init(org = "ghclass-vignette", roster = roster, prefix = "hw1-")

Assign reviewers

Create and customize feedback form for reviewers

Instructors can use the peer_form_create_review() function to create a blank feedback form and save it as an RMarkdown (.Rmd) file (see footnote 2). Instructors can specify the number of questions to be included in the blank form. The function automatically creates q*_score parameters for each question in the YAML header of the RMarkdown document. Students are instructed to use these q*_score fields in the YAML to record the scores they give authors for their assignment. These YAML parameters are later used to extract and save student scores.

By default, the files contain an author field in the YAML header to prompt reviewers to identify themselves. Keeping reviewers non-anonymous (as opposed to authors) is likely to discourage overly harsh or rude reviews. If reviewers should instead stay anonymous, setting double_blind = TRUE will remove this field.

peer_form_create_review(n = 2, double_blind = TRUE, fname = "feedback_blank_review")

The blank form is saved in the working directory unless otherwise specified. The instructor can then customize the questions to be answered by reviewers.
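To make the role of the q*_score fields concrete, here is a minimal sketch of how such scores could be read back from a completed form. It is not the package’s own extraction code: the header layout written to the temporary file is an assumed example, and rmarkdown::yaml_front_matter() is used here simply as one convenient way to parse a YAML header.

```r
library(rmarkdown)

# Write a hypothetical completed form to a temporary file.
# The exact header that ghclass generates may differ from this layout.
form <- tempfile(fileext = ".Rmd")
writeLines(c(
  "---",
  "title: \"Reviewer feedback form\"",
  "params:",
  "  q1_score: 8",
  "  q2_score: 9",
  "---",
  "",
  "1. Placeholder question text."
), form)

# Parse the YAML header and keep only the q*_score parameters
header <- yaml_front_matter(form)
is_score <- grepl("^q[0-9]+_score$", names(header$params))
scores <- unlist(header$params[is_score])

scores
```

Because the scores live in named YAML fields rather than in free text, they can be collected without parsing the body of each review.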

Return review & rating

Collect scores given by reviewers

peer_score_review() collects the scores given by reviewers to authors. We recommend keeping the default setting of write_csv = TRUE to save the output as a .csv file in the working directory.

peer_score_review(
  org = "ghclass-vignette",
  roster = roster_test,
  prefix = prefix,
  form_review = "hw2_review.Rmd",
  write_csv = TRUE
)

Double blind vs. single blind review

The double_blind parameter determines whether authors know who reviewed their assignments (double_blind = FALSE) or whether the entire review process is anonymous (double_blind = TRUE). The default is a double blind review process; however, keeping reviewers non-anonymous might discourage overly harsh or rude reviews. Authors always remain anonymous to reviewers.

If instructors choose to conduct a double blind review process, they should inform students that while their name will be hidden from the students whose assignments they are reviewing, they are not anonymous to the instructor.

This parameter should be set once for each assignment and then passed to each of the functions in the return review process. double_blind determines the naming of folders on authors’ repositories. Changing from double blind to single blind review, or vice versa, in the middle of the return review process will prevent peer_return() or peer_score_rating() from locating the correct files on reviewers’ and authors’ repositories.

Issues and recommendations

Peer review is great! It gives students a chance to learn from each other and get meaningful feedback on their assignments, and it reduces the overhead of grading in large classes. However, asking students to review each other’s work also increases their workload. Thus, instructors should carefully consider

- the number of peer-reviewed homework assignments in each course,

- the number of reviewers per assignment, and

- the timing of deadlines for the assignment and reviews.

Number of homework assignments

Writing a meaningful review with constructive comments is a learning process and it takes time! Thus, when implementing peer review in a course, instructors should consider reducing either the number or the scope of assignments to give students sufficient time to complete and engage with reviews.

Number of reviewers

On one hand, more reviewers means more feedback and less weight on outlier reviews for grading, so more is better, right? On the other hand, more reviewers also means more student hours spent on giving feedback instead of working on final projects, learning new skills, or recharging. Plus, how different will the feedback from each additional reviewer be after two or three reviews? Thus, we recommend assigning a maximum of two or three reviewers per assignment to balance the benefits of multiple reviews against the demand on students’ time and diminishing returns.

Timing

Instructors should carefully consider the timing of deadlines for a) the assignment, b) the completion of the review, and c) the completion of the author rating of reviewers’ feedback, if applicable.

Suppose you want to give students the weekend to complete assignments, your class meets once a week, and you have one homework assignment per week. As shown in the table below, the review process for an assignment given in week 1 won’t be completed until three weeks later. Instructors should also keep in mind that, based on this timeline, starting in week 3 students will juggle parts of the assignments from weeks 1, 2, and 3 (in three separate repositories).

| Week | Assignment | Completed |
|---|---|---|
| 1 | a) Homework (HW) 1 | |
| 2 | a) Review of HW 1 b) HW 2 | a) HW 1 |
| 3 | a) Rating of review on HW 1 b) Review of HW 2 c) HW 3 | a) Review of HW 1 b) HW 2 |
| 4 | … | a) Rating of review on HW 1 b) Review of HW 2 c) HW 3 |
| … | … | … |
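The overlap in the table can be reproduced with a few lines of R. The helper below is a hypothetical illustration (active_tasks() is not part of ghclass) and assumes the weekly rhythm described above: a homework assigned in week k is reviewed in week k + 1 and its reviews are rated in week k + 2.

```r
# Hypothetical helper: tasks a student is working on in a given week,
# assuming one new homework per week and a one-week lag between stages.
active_tasks <- function(week) {
  c(
    sprintf("HW %d", week),
    if (week >= 2) sprintf("Review of HW %d", week - 1),
    if (week >= 3) sprintf("Rating of review on HW %d", week - 2)
  )
}

active_tasks(1)
active_tasks(3)
```

From week 3 onward the function returns three tasks, matching the point at which students handle three repositories at once.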

API rate limits

The GitHub API that is used to access information on students’ repositories has maximum hourly rate limits. The baseline limit is 5,000 calls to the API per hour. With two reviewers per assignment and two files to be moved when returning the review, this hourly limit is reached by peer_return() after 294 students.
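As a back-of-the-envelope check, the two figures above imply roughly 17 API calls per student. The sketch below only redoes that arithmetic; how those calls break down inside peer_return() is not stated here, and students_per_hour() is a hypothetical helper for trying other per-student costs.

```r
rate_limit <- 5000    # baseline API calls per hour, from the text
max_students <- 294   # students processed before the limit is hit, from the text

# Implied average number of API calls per student
calls_per_student <- rate_limit / max_students
round(calls_per_student, 2)  # 17.01

# Hypothetical helper: how many students fit within one hourly limit
# for a given number of API calls per student
students_per_hour <- function(calls_per_student, limit = 5000) {
  floor(limit / calls_per_student)
}

students_per_hour(17)  # 294
```

For larger classes, this suggests splitting the return step across more than one hour or processing the roster in batches.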

1. A custom user-generated peer review roster should follow the format of the roster generated by peer_roster_create() to be correctly processed. It should contain a column user to capture GitHub user names, user_random for anonymized user IDs, and a rev* column for each reviewer that is assigned to review the user’s work.

2. Alternatively, you can access a reviewer feedback form template via File > New File > R Markdown > From Template > Reviewer feedback form after installing and loading the ghclass package.

3. Alternatively, you can access an author rating form template via File > New File > R Markdown > From Template > Author rating form after installing and loading the ghclass package.

4. The rating categories are based on Reily, K., P. Ludford Finnerty, and L. Terveen (2009): Two Peers Are Better Than One: Aggregating Peer Reviews for Computing Assignments is Surprisingly Accurate. In Proceedings of the ACM 2009 International Conference on Supporting Group Work. GROUP’09, May 10–13, 2009, Sanibel Island, Florida, USA.